AI Therapy Outperforms CBT Therapists? The Truth Behind the Headlines: Between Sessions January Newsletter

If your clients have mentioned using ChatGPT between sessions or you've seen the viral videos of AI therapy, you're not alone in wondering about the future of our profession.

In this newsletter:

Analysis of AI and CBT Therapy

Upcoming events, discounts and content!

As waiting lists for mental health support grow longer and therapist burnout continues, millions are turning to a new confidant: artificial intelligence. Available 24/7 at a fraction of the cost of traditional therapy, AI promises to revolutionise access to mental healthcare.

But can algorithms truly replicate the human connection at the heart of therapy? And what does this mean for our careers?

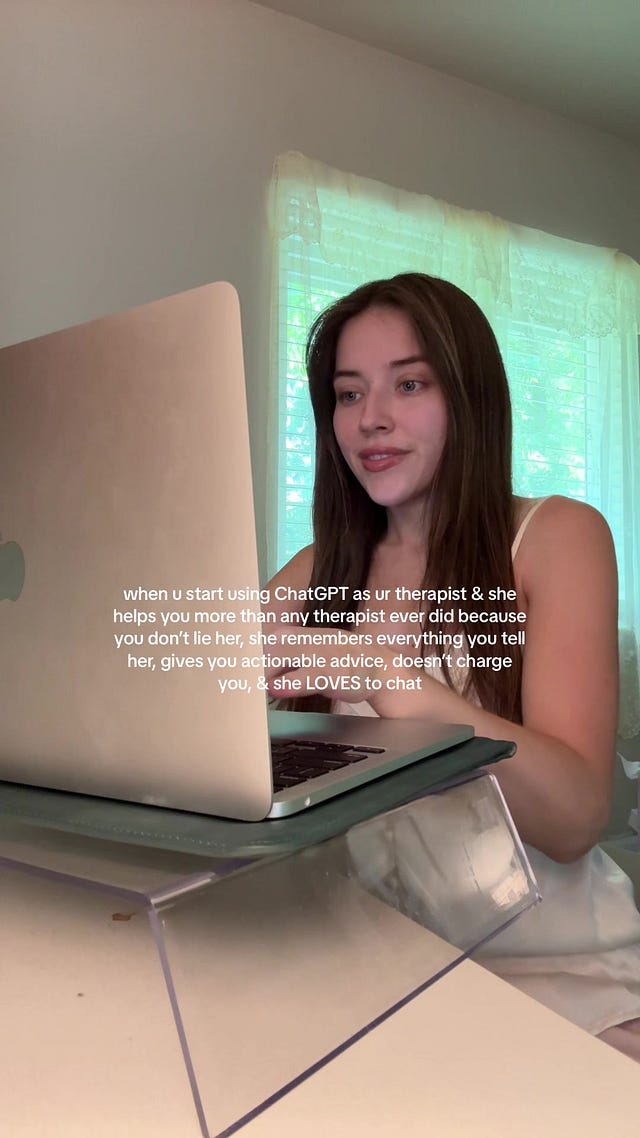

This viral TikTok video received over 50K likes with the caption “when you start using ChatGPT as your therapist & she helps you more than any therapist ever did because you don’t lie to her, she remembers everything, and gives you actionable advice”.

Can AI Truly Replicate Empathy?

One of the largest barriers to AI replacing human therapists is empathy. Can this uniquely human trait truly be replicated by an AI?

Research1 identifies three types of empathetic engagement in therapy: cognitive, emotional and motivational empathy.

AI has been able to replicate the first of these, cognitive empathy: it can recognise and understand the emotional states of others.

A simple prompt to Claude.ai about work stress showed an ability to recognise emotion (“that sounds really difficult”) and to simulate understanding (“work stress can be overwhelming”).

However, AI is unable to replicate emotional and motivational empathy2. AI cannot partake in emotional experiences: it neither shares nor experiences joy or sorrow.

Interestingly, in one study, when participants accessing a therapeutic AI did not know they were messaging with an AI, they felt the responses were authentic and practical3. Another study found that when participants knew they were talking to an AI, the responses seemed less authentic and trustworthy4.

This speaks to the inherent importance of the humanity of the therapist and their ability to truly share in distressing emotions.

CBT Therapist Chris Hutchins-Joss adds: “it is crucial to acknowledge that these relationships are qualitatively different from those formed with human therapists . . . genuine empathy, remains irreplaceable in the therapeutic context.”

Current Clinical Use

AI therapists are already in use, with Woebot and Ionamind delivering CBT techniques via AI.

The NHS has begun to experiment with AI support in Talking Therapies. Limbic.ai, an AI assessment and clinical decision support company, claims to reduce staff burnout and drive better patient outcomes, and NHS Bradford and Craven Talking Therapies has reported improved access rates with Limbic.

The Talking Therapies service used Limbic AI for initial triage, with the collaboration reporting that Limbic determines the correct ADSM with 93% accuracy.

One clinician reported ‘I think it’s fantastic that we have certain details prior to the assessment, such as pronouns, drug and alcohol use, risk, etc . . . it (also) means the client is not having to repeat themselves, and it allows more time to be spent on identifying the main problem, conveying empathy.’

What appears to be developing is AI supporting therapeutic work, rather than replacing it.

Does Research Suggest AI Can Go Beyond Basic Support?

AI is therefore already replacing elements of a CBT therapist's role. But can AI really replicate therapy beyond triage and basic information gathering?

One study asked therapists to review transcripts of human-to-human therapy vs AI-to-human therapy. Therapists (n = 63) could correctly identify which transcripts were human-human only 54% of the time, and rated the AI transcripts as higher quality5.

However, these were transcripts of basic active listening - there were no CBT techniques present. It’s therefore not clear whether results would be the same if active change methods were taking place.

Nonetheless, trials from Woebot found significant reductions in perceived stress and burnout over an 8-week study period of Woebot AI support6.

However, the difference in scores between the Woebot group and the control group was not statistically significant, and participants were primarily sub-clinical.

This points to a wider issue within the research base: most of the research on AI remains experimental, with pilot studies dominating the field7.

Expert and Community Perspectives

Arjun Nanda, psychiatrist and host of the Mental Health Forecast, argues: "Nothing can replace genuine human connection," but he adds "AI can do things therapists can't: be cheap, accessible, and available 24/7."

For example, Woebot markets itself on its easy accessibility.

This poses an interesting dynamic for services like the UK's NHS, which face long waitlists, and for countries where no state mental health support currently exists.

Viraj Kulkarni, founder of AI speech therapy app Iyaso, offers a compelling perspective: "AI won't replace a human therapist in most cases because there is no human therapist to replace in most cases! Nine out of ten users of our app have never seen a therapist."

His point speaks to a developing hierarchy that could exist in the future. AI may not replace therapists, because it may be used by those who never access a human therapist to begin with.

Human therapists may therefore sit at the top of the hierarchy, reserved for those who can afford them or for those with the greatest need within the NHS.

Arjun concurs, saying: “therapists that think AI won’t replace them are missing the point. AI will change the landscape of mental health itself”.

He adds “an AI won’t replace you. A human with an AI will . . . which is difficult to reconcile in one of the most uniquely human fields”.

Looking Ahead

This suggests a future where:

AI handles basic interventions, triage and administrative work

Human therapists focus on psychotherapy beyond this

Therapeutic relationships become even more central

Technology augments rather than replaces human connection

As Hutchins-Joss notes: "By staying informed about developments and carefully selecting AI tools that align with ethical guidelines, therapists can harness AI's potential while upholding the highest standards of client care."

However, there are also questions about AI's ability to handle crisis situations and recognise more subtle signs of deteriorating mental health, such as body language.

The liability framework for AI therapeutic interventions also remains unclear - who is responsible when AI misses critical warning signs or needs to make a safeguarding referral?

The future is far from certain, but what we can perhaps infer is that AI will contribute to a two-tiered mental health system rather than replace human psychotherapists.

What role do you see AI playing in your practice? How do you feel about it and the direction we are heading? Share your thoughts below!

Coming Up at Between Therapists

Free for All Subscribers

New clinical tools page recently updated with formulation documents.

Premium Content for Paid Subscribers

Upcoming articles:

Integrating AI ethically into your practice: do we want to?

What is polyvagal theory and should we integrate it into CBT?

Upcoming workshops:

Sign up for the workshop you’d like to attend here: REGISTER.

We will also send reminders closer to the time.

We are also in talks with multiple companies to provide exclusive discounts to members of our community. Watch this space!

Want to access premium content, the community chat and workshops? Upgrade your membership today to join our community of forward-thinking therapists.

1. Zaki J, Ochsner KN. The neuroscience of empathy: progress, pitfalls and promise. Nat Neurosci. 2012;15(5):675–680. doi: 10.1038/nn.3085.

2. Rubin M, Arnon H, Huppert JD, Perry A. Considering the Role of Human Empathy in AI-Driven Therapy. JMIR Ment Health. 2024 Jun 11;11:e56529. doi: 10.2196/56529. PMID: 38861302; PMCID: PMC11200042.

3. Lopes E, Jain G, Carlbring P, Pareek S. Talking mental health: a battle of wits between humans and AI. J Technol Behav Sci. 2023 Nov 8:1–11. doi: 10.1007/s41347-023-00359-6.

4. Hohenstein J, Kizilcec RF, DiFranzo D, Aghajari Z, Mieczkowski H, Levy K, Naaman M, Hancock J, Jung MF. Artificial intelligence in communication impacts language and social relationships. Sci Rep. 2023;13(1):5487. doi: 10.1038/s41598-023-30938-9.

5. Kuhail MA, Alturki N, Thomas J, Alkhalifa AK, Alshardan A. Human-Human vs Human-AI Therapy: An Empirical Study. International Journal of Human–Computer Interaction. 2024:1–12. doi: 10.1080/10447318.2024.2385001.

6. Durden E, Pirner MC, Rapoport SJ, Williams A, Robinson A, Forman-Hoffman VL. Changes in stress, burnout, and resilience associated with an 8-week intervention with relational agent “Woebot”. Internet Interventions. 2023;33:100637. doi: 10.1016/j.invent.2023.100637.

7. Bendig E, Erb B, Schulze-Thuesing L, Baumeister H. The next generation: Chatbots in clinical psychology and psychotherapy to foster mental health – A scoping review. Verhaltenstherapie. 2022;32(Suppl 1):64–76. doi: 10.1159/000501812.

Thanks again for writing this! I thought this paper I co-authored might be of interest: https://www.medrxiv.org/content/10.1101/2024.07.17.24310551v2

It's currently in preprint, going through peer review. We investigated an AI powered digital program that I was involved in building.

The main findings were:

- People used the digital program for about 6 hours over 2 months. Most people (78%) stuck with the program and did at least the first few sessions.

- The program really helped reduce people's anxiety symptoms. The improvements were bigger than for people who didn't do the program and as good as seeing a therapist in person.

- The benefits lasted even 1 month after finishing the program, especially for people who started with more severe anxiety.

- The digital program got similar results to seeing a therapist, but it took the therapists much less time (only about 1.5 hours per person on average).

So I think there's some incredible potential here to change lives. I also wonder how factors like solipsistic introjection might influence the nature of the therapeutic relationship.